The Boston Globe

Computer science major Rona Wang said her experience shows how the rising technology can have racial blind spots.

Rona Wang is no stranger to using artificial intelligence.

A recent MIT graduate, Wang, 24, has been experimenting with the variety of new AI language and image tools that have emerged in the past few years, and is intrigued by the ways they can often get things wrong. She’s even written about her ambivalence toward the technology on the school’s website.

Lately, Wang has been creating LinkedIn profile pictures of herself with AI portrait generators, and has gotten some bizarre results, such as images of herself with disjointed fingers and distorted facial features.

But last week, the output she got using one startup’s tool stood out from the rest.

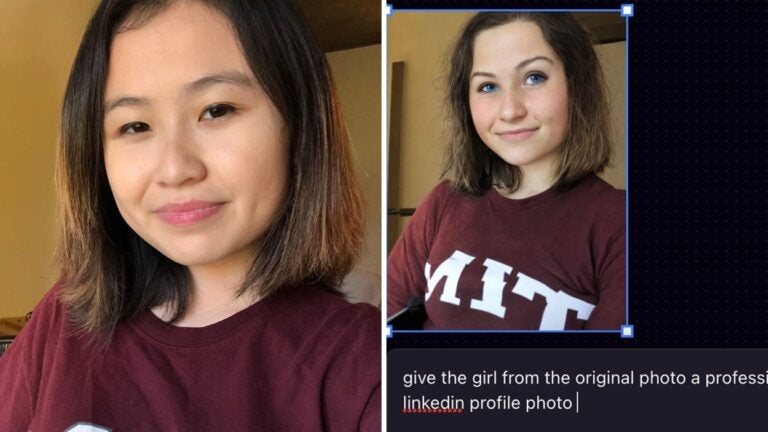

On Friday, Wang uploaded a picture of herself smiling and wearing a red MIT sweatshirt to an image creator called Playground AI, and asked it to turn the image into “a professional LinkedIn profile photo.”

In just a few seconds, it produced an image that was nearly identical to her original selfie, except Wang’s appearance had been changed. It made her complexion appear lighter and her eyes blue, “features that made me look Caucasian,” she said.

“I was like, ‘Wow, does this thing think I should become white to become more professional?’” said Wang, who is Asian-American.

The photo, which gained traction online after Wang shared it on Twitter, has sparked a conversation about the shortcomings of artificial intelligence tools when it comes to race. It even caught the attention of the company’s founder, who said he hoped to solve the problem.

Now, she thinks her experience with AI could be a cautionary tale for others using similar technology or pursuing careers in the field.

Wang’s viral tweet came amid a recent TikTok trend in which people have been using AI products to spiff up their LinkedIn profile photos, creating images that put them in professional attire and corporate-friendly settings with good lighting.

Wang admits that, when she tried using this particular AI, at first she had to laugh at the results.

“It was kind of funny,” she said.

But it also spoke to a problem she’s seen repeatedly with AI tools, which can sometimes produce troubling results when users experiment with them.

To be clear, Wang said, that doesn’t mean the AI technology is malicious.

“It’s kind of offensive,” she said, “but at the same time I don’t want to jump to conclusions that this AI must be racist.”

Experts have said that AI bias can exist under the surface, a phenomenon that’s been observed for years. The troves of data used to deliver results may not always accurately reflect various racial and ethnic groups, or may reproduce existing racial biases, they’ve said.

Research, including at MIT, has found so-called AI bias in language models that associate certain genders with certain careers, or in oversights that cause facial recognition tools to malfunction for people with dark skin.

Wang, who double-majored in mathematics and computer science and is returning to MIT in the fall for a graduate program, said her widely shared photo may have just been a blip, and it’s possible the program randomly generated the facial features of a white woman. Or, she said, it may have been trained using a batch of photos in which a majority of people depicted on LinkedIn or in “professional” scenes were white.

It has made her think about the possible consequences of a similar misstep in a higher-stakes scenario, such as a company using an AI tool to select the most “professional” candidates for a job, and whether it would lean toward people who appeared white.

“I definitely think it’s a problem,” Wang said. “I hope people who are making software are aware of these biases and thinking about ways to mitigate them.”

The people responsible for the program were quick to respond.

Just two hours after she tweeted her photo, Playground AI founder Suhail Doshi replied directly to Wang on Twitter.

“The models aren’t instructable like that so it’ll pick any generic thing based on the prompt. Unfortunately, they’re not smart enough,” he wrote in response to Wang’s tweet.

“Happy to help you get a result but it takes a bit more effort than something like ChatGPT,” he added, referring to the popular AI chatbot that produces large batches of text in seconds from simple commands. “[For what it’s worth], we’re quite displeased with this and hope to solve it.”

In additional tweets, Doshi said Playground AI doesn’t “support the use-case of AI photo avatars” and that it “definitely can’t preserve identity of a face and restylize it or fit it into another scene like” Wang had hoped.

Reached by e-mail, Doshi declined to be interviewed.

Instead, he replied to a list of questions with a question of his own: “If I roll a dice just once and get the number 1, does that mean I will always get the number 1? Should I conclude based on a single observation that the dice is biased to the number 1 and was trained to be predisposed to rolling a 1?”

Wang said she hopes her experience serves as a reminder that even though AI tools are becoming increasingly popular, it would be wise for people to tread carefully when using them.

“There’s a culture of some people really putting a lot of trust in AI and relying on it,” she said. “So I think it’s great to get people thinking about this, especially people who might have thought AI bias was a thing of the past.”